Pack Mule

TF Site Team

- Joined: Jan 24, 2013
- Messages: 3,749
- Location: USA
- Vessel Name: Slo-Poke
- Vessel Make: Jorgensen custom 44

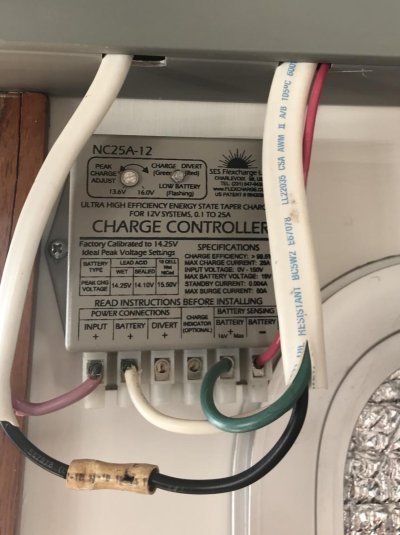

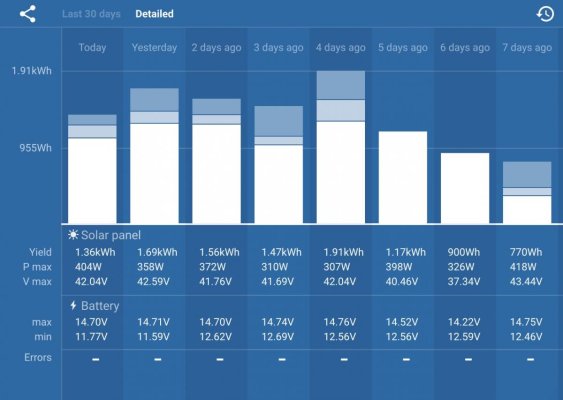

Since this thread is already going, I have a question about diodes. Yesterday I noticed that the diode at my solar panel was broken or blown. What size diode should I be using for a single 120-watt solar panel? This is the second one to fail in the last 5 years. Where it's mounted isn't ideal; it's out in the elements. Also, how close to the solar panel does it need to be?